SQL Tuning

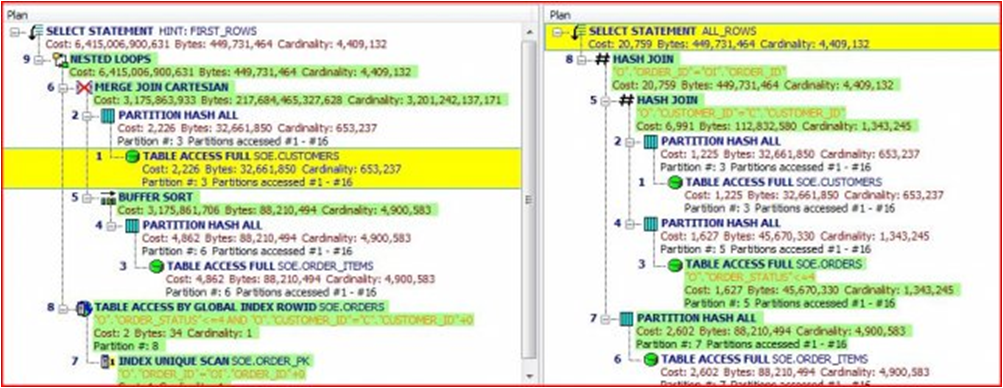

- The above graphic represents a query (in gray text) two with two possible execution plans being compared side by side. The textual approach on top has been the classic method. The method on bottom is the method introduced here using graphics and a step by step methodology.

Methodology

- 1. List tables in query

- 2. List the joins in the query

- 3. Layout a diagram tables as nodes

- 4. Draw connectors for each join in where clause

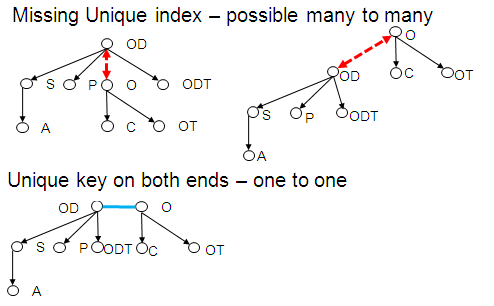

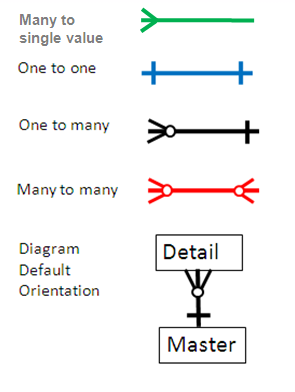

- 1 to 1 connection

- use blue line

- 1 to many

- use black line

- crows feet towards many

- many to many

- crows feet on both ends

- 5. Calculate filter ratios for any tables with filters

- filter ratio = number of rows returned with fitler / number of rows

- display in yellow below table node

- 6. Calculate two table join sizes for all two table joins in diagram (ie for every connection line)

- extract the join information, including filters, and run count on that join

- display in red on join line

- Alternatively, or as a complement, calculate join filter ratios in both directions

- display at each end of join connection line

- 7. Find the table sizes for each table

- display in green above table node

- Here is a query from Dan Tow's "SQL Tuning" which was the foundation for much of the ideas here:

- SELECT C.Phone_Number, C.Honorific, C.First_Name, C.Last_Name,

- C.Suffix, C.Address_ID, A.Address_ID, A.Street_Address_Line1,

- A.Street_Address_Line2, A.City_Name, A.State_Abbreviation,

- A.ZIP_Code, OD.Deferred_Shipment_Date, OD.Item_Count,

- ODT.Text, OT.Text, P.Product_Description, S.Shipment_Date

- FROM Orders O, Order_Details OD, Products P, Customers C, Shipments S,

- Addresses A, Code_Translations ODT, Code_Translations OT

- WHERE UPPER(C.Last_Name) LIKE :Last_Name||'%'

- AND UPPER(C.First_Name) LIKE :First_Name||'%'

- AND OD.Order_ID = O.Order_ID

- AND O.Customer_ID = C.Customer_ID

- AND OD.Product_ID = P.Product_ID(+)

- AND OD.Shipment_ID = S.Shipment_ID(+)

- AND S.Address_ID = A.Address_ID(+)

- AND O.Status_Code = OT.Code

- AND OT.Code_Type = 'ORDER_STATUS'

- AND OD.Status_Code = ODT.Code

- AND ODT.Code_Type = 'ORDER_DETAIL_STATUS'

- AND O.Order_Date > :Now - 366

- ORDER BY C.Customer_ID, O.Order_ID DESC, S.Shipment_ID, OD.Order_Detail_ID;

- SELECT C.Phone_Number, C.Honorific, C.First_Name, C.Last_Name,

Step 1 : Tables

- List tables in the query, for example from the query above

- Orders O,

- Order_Details OD,

- Products P,

- Customers C,

- Shipments S,

- Addresses A,

- Code_Translations ODT,

- Code_Translations OT

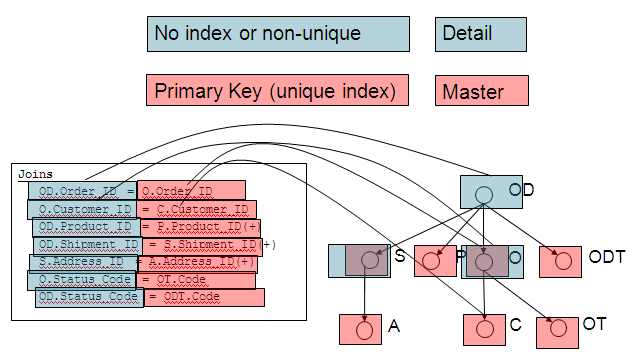

Step 2 : Joins

- Identify all the joins in the query, for example from the query above

- OD.Order_ID = O.Order_ID

- O.Customer_ID = C.Customer_ID

- OD.Product_ID = P.Product_ID(+)

- OD.Shipment_ID = S.Shipment_ID(+)

- S.Address_ID = A.Address_ID(+)

- O.Status_Code = OT.Code

- OD.Status_Code = ODT.Code

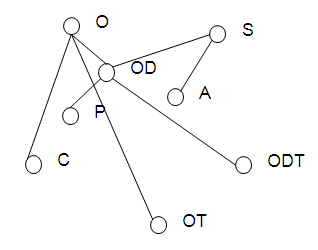

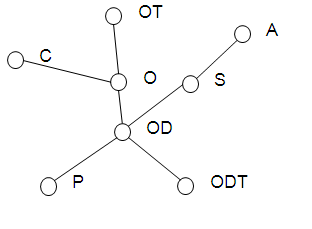

Step 3: Laying Out Join Diagram

- Lay out the tables in the query as nodes, with a line connecting each table that is joined.

- What kind of method do we have for laying out the join diagram?

- Laying the out willy nilly is mostly a mess:

- Laying the out in a balanced tree could be of some use:

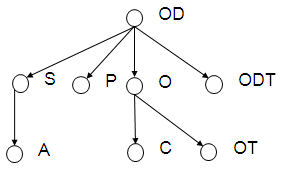

- but the real power comes in when we start laying them out with join relationships as our guide:

we put details above masters. Some masters are actually the detail for other masters.

This structure will first of all quickly indicate any schema irregularities such as Cartesian joins (no join) , implied Cartesian joins (two details for one master), many to many relationships(potential exploding joins) and one to one relationships(ok sometimes but worth questioning the reasoning).

OK, so the diagram tells me some nifty things about the schema and relationships but you say "that's a lot of work for just some nifty stuff especially if I have to do it by hand and it's not automated." well, first it is automated in DB Optimizer and second there's a lot more we can do with the VST diagrams. First of all the diagrams give us a sense of what's happening in the diagram and tells us how information about the impact will have on the running row set. The running row set is the amount of rows that result from a starting join and that we carry to the next table that we join and so on until we finish all the joins in the query. Joining up in the diagram typically expands the running row set where as joining down typically keeps running row set the same:

Step 4: Join Connectors

Step 5: Predicate Filter Ratio

- In order to find the optimal path through the join tree we need one other thing - the predicate filter ratio. The predicate filter ratio is the fraction of the table returned after applying any restrictions in the where clause other than the joins. For example:

- select v.green, f.yellow from vegetables v, fruit f where f.blue=v.blue and f.red='1000';

- the where clause phrase f.red='1000' is a predicate filter. The predicate filter ratio, or filter ratio is the

- (select count(*) from fruit f where f.red='1000') / (select count(*) from fruit)

- Basically the table with the most selective filter is where we want to start the query and we want to go to the next most selective.

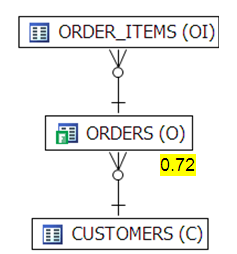

- Here is an example query from "swingbench" a load simulation tool for Oracle:

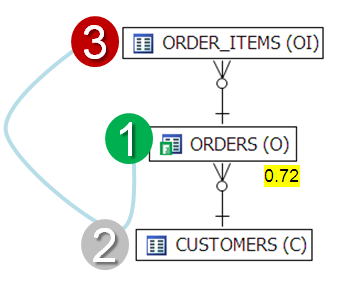

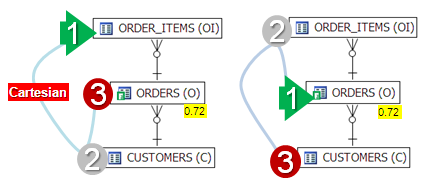

- It's clear that the execution path should be:

Because there is only one filter, the "0.72" on Orders. We join down from there to Customers then up to Order_Items.

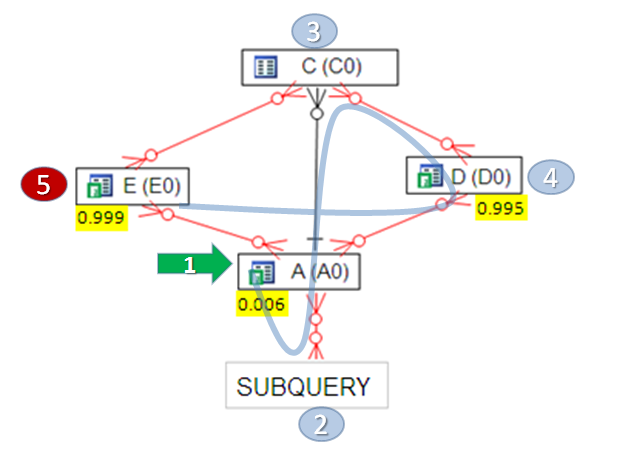

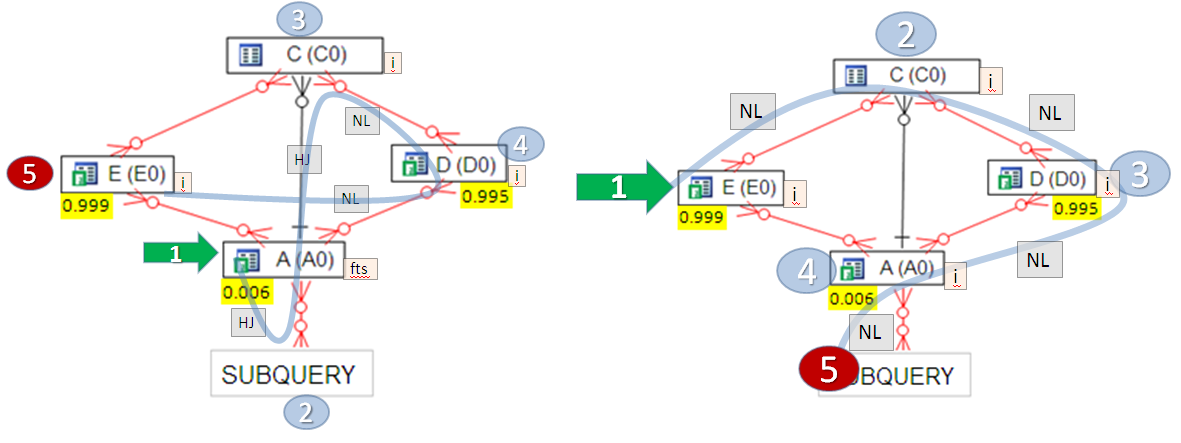

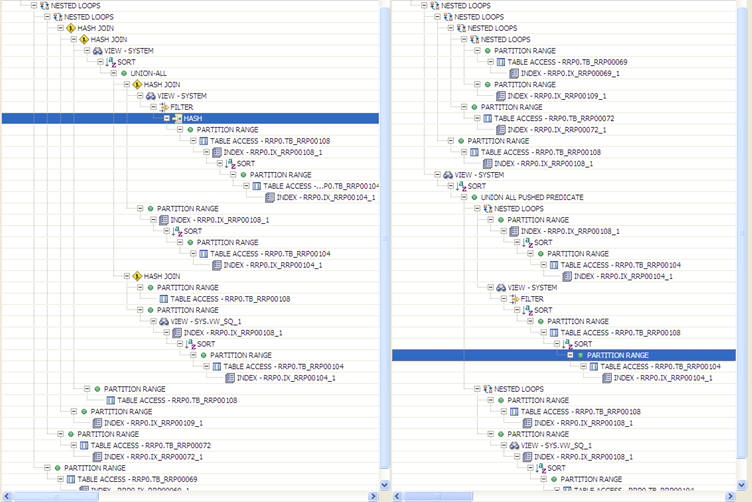

Now compare this to old style execution plans. Below is a comparison of two possible execution plans on the same query shown side by side:

First it difficult to read the information, second it takes time to load up enough of the information in to the mind and then play with it enough to understand what's happening and finally it's difficult to see whether these plans are realistic or not.

The above image shows the comparison of two alternative execution plans on a SQL statement. The text version on the left is the classic "explain plan" compared side by side. The right side is the same information but shown graphically. The Green Arrow labeled "1" is where the plan starts, followed by 2 then ending at 3. The joins are labeled "HJ" for hash join and "MJ" for merge join. The tables are ordered themselves not by execution order but by their relationship with details located above masters. The most important information is the join order. Of secondary interest is the join type, merge join, hash join or nested loops.

What I'm working on right now is making Explain Plans easier. Compare the textual explain to the graphic one. The graphic is so much easier. At the font size the text version is impossible top read. Even if it was big enough, the text would still take longer and more brain CPU cycles to understand. The graphic on the right tells me the most important information to optimally execute the query. The query will be optimally executed by starting on Orders, since it is the only table with a filter on it represented by both the little green F 9we will increase the size in the next version of DB Optimizer to make it more obvious) and the highlighted yellow "0.72". The value "0.72" is the filter ratio, or the fraction of rows returned after applying the query filters on this table. The filter "0.72" is the only filter ratio in the diagram, but if there was another filter ratio, we would pick the most selective one as the table to start are query, but in this case there is only one filter. From that filter we always join down into mater tables (master tales have the cross end of the join line - the crows feet represents detail table). By joining into a master table we keeps the same size of the "running row set" . The "running row set" the internal number of rows that are carried from one join to another as we join into each table in the execution plan. Can you imagine having carry boxes to 3 people in the office but depending on the order you visited the 3 people you either got to drop off boxes or you had to pick up boxes. Then the easiest most effortless path would be the path where you got rid of the most boxes first and picked up the least number of extra boxes along the way. The number of boxes you have to carry is equivalent to the running row set. For example in the above executions paths that are being compared, the one on the left does a join between ORDER_ITEMS and CUSTOMERS but there is no join so instead of getting rid of boxes we multiply - we do a Cartesian join. Thus this plan is not worth pursuing. The second plan is not worth pursuing because it joins from a master into a detail again increasing running row count prematurely - not as bad as a Cartesian but still worse than joining down to the master.

Step 6: Two Table Join Sizes and Join Filters

- Join diagrams with the relationships and predicate filter ratios can take us a long ways to finding the optimal path, but to relay nail down the optimal path it takes some extra information, especially in the case where the joins are many to many and the structure of the join diagram.

- What we want is information about the result set sizes of the table joins in the diagram.

- The structure of the diagram will give us information on the maximum possible result set sizes.

- It's generally easy to find the rows in a table and the number of distinct values (ndv) for a table and it's joining columns.

- Depending on how big your tables are and how much time you have to run analysis of the tables, probably the easiest thing to do is just extract the sql code for each pair of tables that is joined and run a "count(*)" on that join.

- If the tables are large and you don't have much time, you can get good estimates from the rows in the table and the number of distinct values in the joining columns.

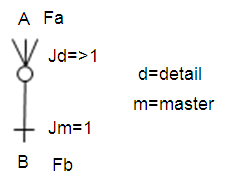

- Another way to look at it, that Dan Tow uses, is to look at the join filters.

- Where Jd, ie join detail, is the average number of rows returned when join in a for from the master.

- Likewise Jm is the master join ratio or the average number of rows that are returned joining from the child into the master. Generally speaking the Jm will be 1 in the case of PK/FK relations, though it might be smaller for unique indexes or unique constraints on the master join column instead of PK/FK.

- In rare cases if the join into the the detail can return less than 1 on average, then in this case, it is just as good to join up to the "detail" as down to the master.

- NOTE: for minimum values returned, unless there is a constraint, a join can return 0 rows. A PK/FK relation will constrain that there be a parent(master) for each child(detail).

- All and all, I think the easiest route is just to display the join set sizes from each two way join.

Step 7: Table Sizes

- Finally, and maybe actually least, we can display the number of rows in a the tables in the query.

Analyzing Results

Query 1

- The following query ran for more than a day before I killed it : SQL Query Text Q1

- I ran it through Quest's SQL Tuner to see if I could find a better plan, but the basic cases too so long I could get very far.

- Then I tried to analyze it with DB Optimizers VST diagrams.

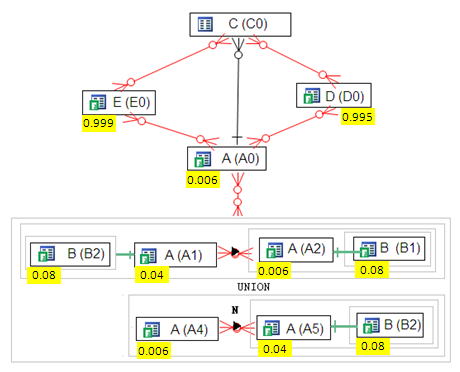

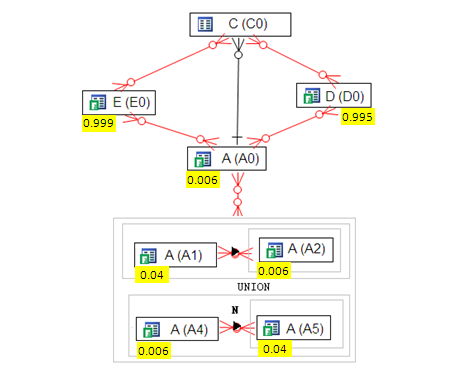

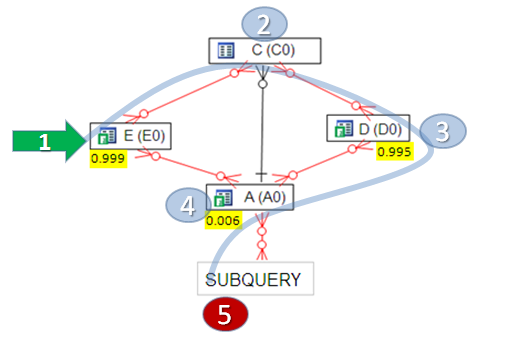

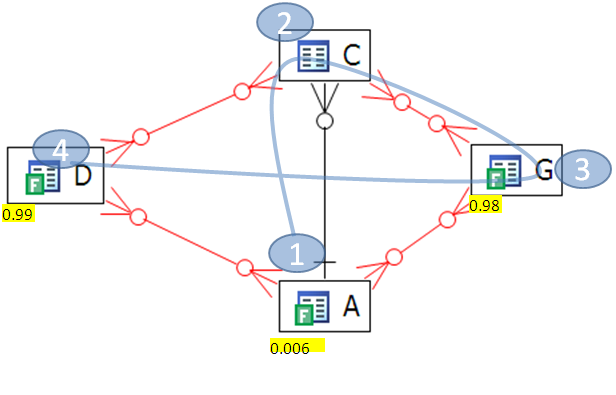

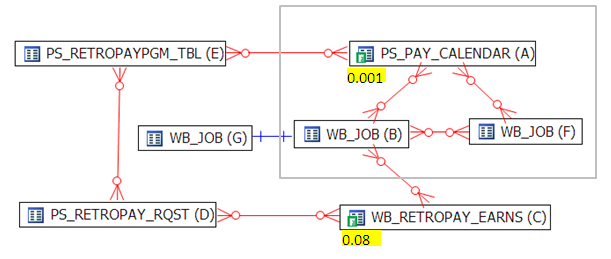

- Laying it out in a Visual SQL Tuning (VST) diagram gives:

- Immediately we can notice some unusual things about the query such as table A is used 5 times and table B is used 3 times, but I analyzed this query without know what, why or how the had made it. The diagram in it's default state has a fair bit going on, but we can eliminate a lot. For starters we can eliminate all the

- Type joins. In these cases, the table B has a subquery on it (denoted by the gray box around it) and only one row is being selected. In this case the select on B acts as a filter on A, so we can just eliminated it from the diagram as it won't change the actual execution plan because it can't be merged and it has to be run before we can select on A. Thus let's eliminate all the selects on B of this type giving us:

- Now we can go farther simplifying the query because UNION has to be executed before joining into the rest of the query. Oracle, as of now, AFIK, has no way of merging a UNION inot the main query (I ran this by Benoit at Oracle who confirmed)

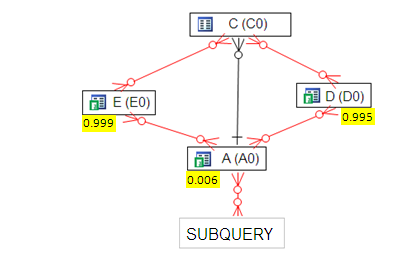

- But the UNION doesn't have that much interesting going on. We have an outer join between A1 and A2 and a NOT EXISTS between A4 and A5. We can vary how we do these joins, but the big question of join Order is mute because there are only two tables. Thus we can farther simplify the query as:

- Now the question is where to start the join. It's pretty clear that we start at A (A0) because it has the most selective filter returning only 0.006 of the table. We can then join to C (C0) to play it safe. To know where to go from here we have to have more information, but with this starting point we have made the most important decision in the execution plan.

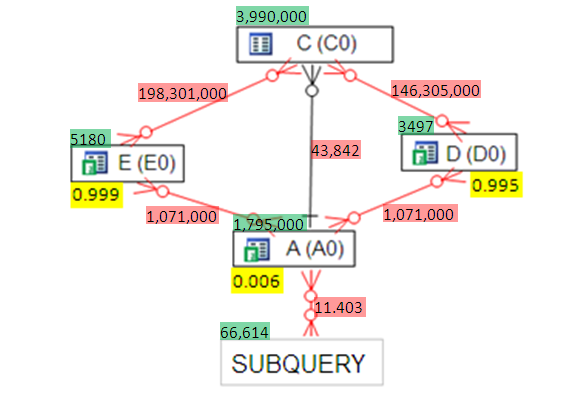

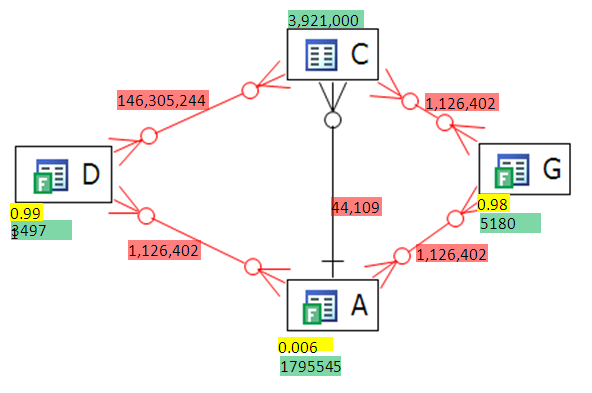

- Going farther in our analysis, lets look at TWO TABLE JOIN sizes

- Light green is the table sizes which aren't need for the analysis but are interesting for discussion.

- Light red is the TWO TABLE JOIN (TTJ) sizes.

- From the TTJ sizes we can see it's a horrible idea to join (E,C) or (D,C) at the start of the query.

- On the other hand the best join is (A,SUBQUERY) the to C.

- We end up with a JOIN PATH like:

- Oracle's default path was

- Which is clearly wrong because it starts with the worst join possible.

- Oracle's default path took over a day, where as the new path took 30 seconds.

- We can compare the plans side by size which even at the small size above enables the viewer to see some big differences.

- where as the text comparison is quite difficult and tedious.

Query 2

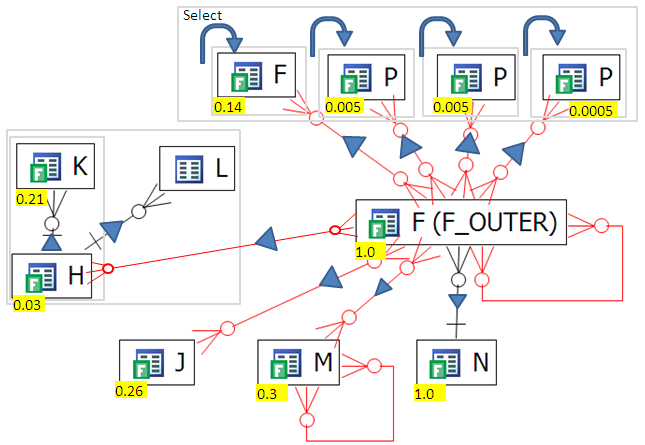

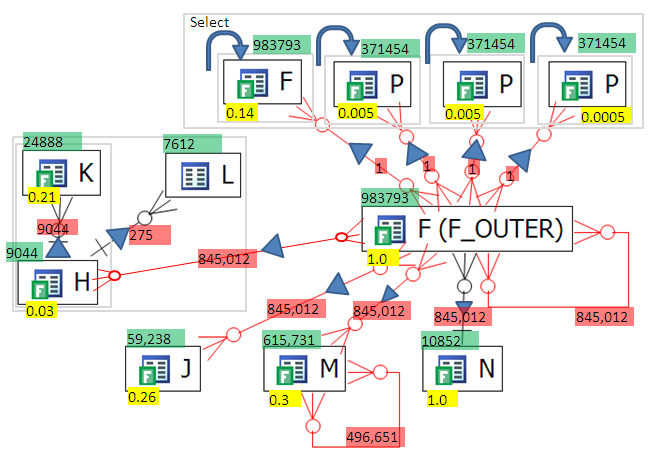

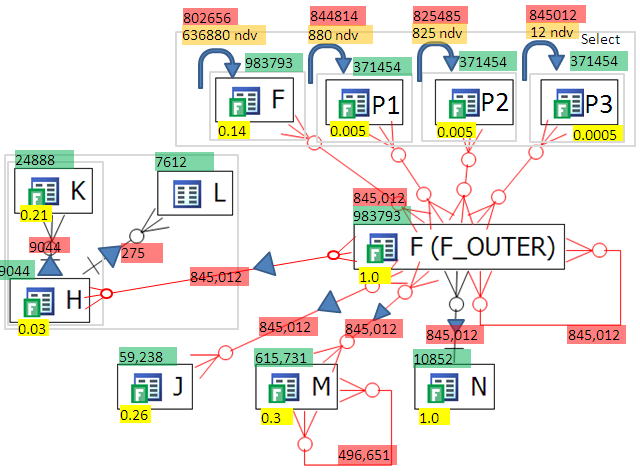

- The VST diagram looks like

- There are two interesting things about this diagram.

- Every thing is OUTER JOINED to F_OUTER

- There are correlated subqueries in the select

- There are two things to check - what is the effect of the OUTER JOINS. The OUTER JOINS can easily mean that all joins into F_OUTER don't change the result set size. Let's confirm by looking at the TTJ sizes:

- The only thing that bounds the number of rows returned by F_OUTER is it's self join (the line going in and out of F_OUTER) on the bottom left of F_OUTER.

- What this means is that it doesn't matter what order we join tables into F_OUTER.

- Now we can turn to the other interesting thing about the query. There are 4 correlated subselects in the select clause. These queries in the select clause are called "scalar subqueries." These scalar subqueries can be a bad idea or a good idea depending on how mainly on how many distinct values are used as input into the them.

AFAIK, Oracle doesn't merge subqueries in the select, and certainly not the ones in this query because they are embedded in cases statements - looks like I might have spoken too soon! more research to do but looks like Oracle can merge these scalar subqueries even with the case statement. I will try to run some more tests . To be continued)

- In the worst case scenario the scalar subqueries are going to be run 845,012 times - that is one heck of a lot of work which would be a BAD IDEA.

Looking at the above diagram, and the 4 scalar subqueries in the select clause, the top red number is how many times the scalar subquery will be run (based on the case statement in the select clause) and the orange highlight is how many distinct values will be used in the scalar subquery where clause. P3 will benefit for scalar subquery caching but F won't because there are too many distinct values. On the other hand for P1 and P2 could if there are no collisions (see CBOF p216-217) and the scalar subquery caching is actually supports 1024 values. ( see http://www.oratechinfo.co.uk/scalar_subqueries.html#scalar3 ) for a great write up on analysis of scalar subquery caching - the analysis on this page seems to show that caching maxes out way before 1024 but that might be because of collisions)

The subqueries in the select clause look like

- select CASE WHEN F.f1 IS NULL

- THEN NULL

- ELSE (SELECT X.f2

- FROM X

- WHERE code_vl = F.f2)

- END AS f0

- from F;

and could be merged into the query like:

- select CASE WHEN F.f1 IS NULL

- THEN NULL

- ELSE ( X.f2)

- END AS f0

- from F , X

- where code_vl(+) = F.f1;

( NOTE: the first query will break if the correlated sub query returns more than one value where as the second query will return the multiple rows.)

The VST diagram can't tell you that this is the solution, but they can point out that the place to spend your time is the subqueries in the select.

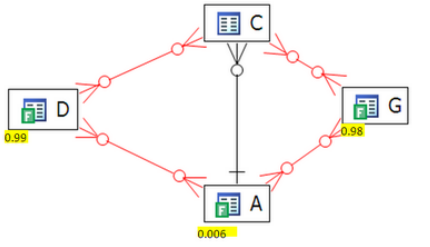

Query 3

- see text at : [Query 3]

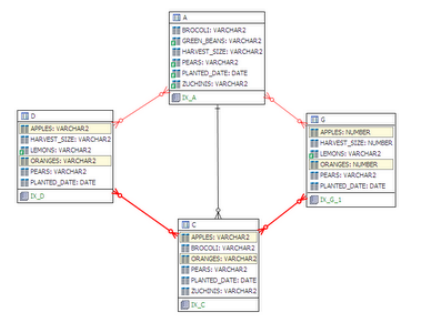

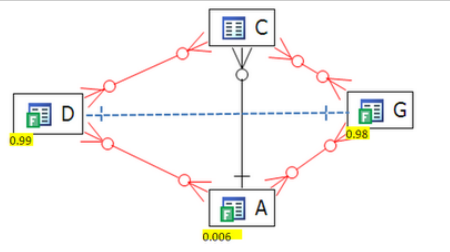

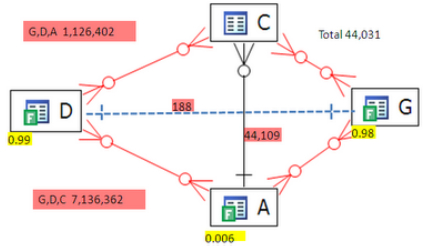

- This is a simple query compared to Query 1 and Query 2. The diagram looks like

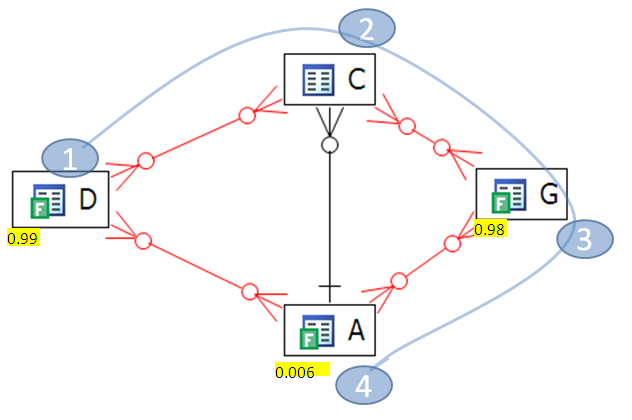

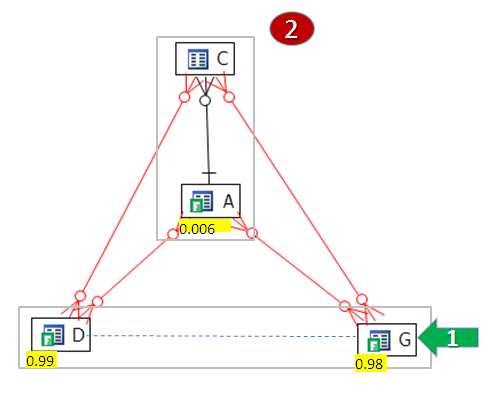

- By default Oracle chooses this path:

- As we can clearly see, this is the wrong path. Why? Because, as previously blogged about, Oracle starts the join on table D who has a non-selective filter returning 0.99 of the table. A more optimized path would be

- We start at the table with the most restrictive filter, table A, whose filter returns 0.006 of the table. This path is much better than the first path.

- 1. First Path 4.5 secs, 1M logical reads

- 2. Second path 1.8 secs 0.2M Logical reads

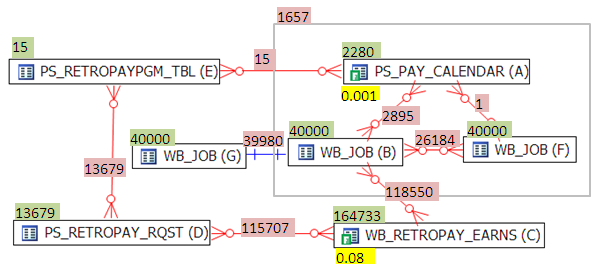

- Let's look at the extended VST stats on the diagram to confirm our ideas:

- Green is Table size

- Yellow is Filter Ratio

- Red is the Two Table Join Size

- It's clear that the first join should be (A,C) because it give us the smallest running row count at the beginning of the execution path. (yellow is filter ratio, green is rows in table and red is the resulting join set size between tables including using the filters)

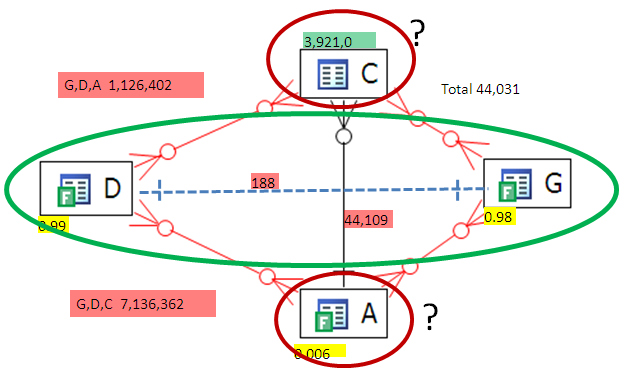

- Now let's look at the query more closely. There is actually a connection from G to D that we can get through transitivity. Here we can see the transitivity (the yellow highlighted fields are the same in D, G and C).

- D.apples=C.Apples and C.Apples=G.Apples

- same can be said for oranges, so our VST diagram actually looks like:

- The transitivity brings to light a lurking ONE-to-ONE relationship between D and G! Thus a join between D and G will return at most min (D,G) rows and maybe less. Let's see what the row counts are:

- Check it out! the join set between G and D is only 188 rows!!

- But if we join G to D, then where do we go? Remember Oracle can only join to one other object, either C or A. Looking at the join set sizes (G,D)C is 7M ! and (G,D)A is 1M !

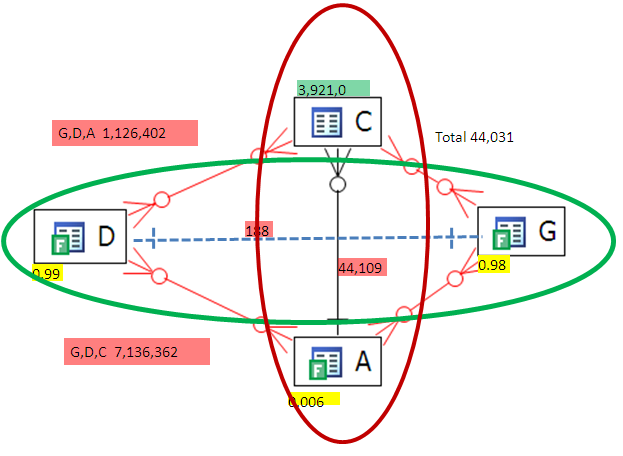

- A big result sets where as the result form A to C is only 44K. How can we take advantage of the low result set from (G to D) and (A to C) at the same time? The answer is to make subqueries that force Oracle to evaluate them separately and then join the results sets:

- SELECT * FROM

- (

- SELECT /*+ NO_MERGE */ c.apples, c.oranges, a.harvest_size

- FROM a, c

- WHERE

- a.planted_date = TO_DATE ('02/10/2008', 'dd/mm/yyyy') AND

- .pears = 'D' AND a.green_beans = '1' AND

- a.planted_date = c.planted_date AND

- a.pears = c.pears AND

- a.zuchinis = c.zuchinis AND

- a.brocoli = c.brocoli AND

- a.zuchinis = '0236'

- ) X,

- (

- SELECT /*+ NO_MERGE */ d.apples, d.oranges, d.harvest_size

- FROM d, g

- WHERE

- d.planted_date = TO_DATE ('02/10/2008', 'dd/mm/yyyy') AND

- g.planted_date = TO_DATE ('02/10/2008', 'dd/mm/yyyy') AND

- g.apples = d.apples

- AND d.oranges = g.oranges

- AND d.pears = 'D' AND g.pears = 'D'

- AND g.pears = d.pears

- AND g.harvest_size = d.harvest_size

- AND (d.lemons = 0 OR d.lemons IS NULL) AND

- (g.lemons = 0 OR g.lemons IS NULL)

- )

- Y WHERE

- X.oranges = Y.oranges AND

- X.apples = Y.apples AND

- X.harvest_size = Y.harvest_size;

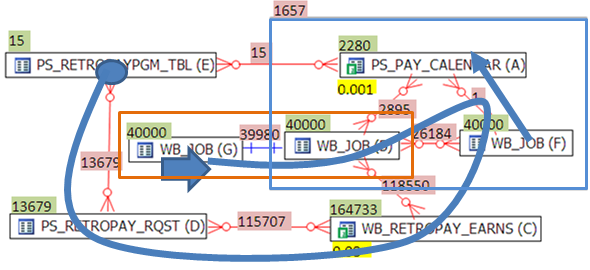

- The VST looks like

- It doesn't really matter where we start. We join (G,D) and separately we join (C,A) then we join these two result sets.\

- This final version runs in

- elapsed 0.33 secs and 12K logical reads

- down from an original

- elapsed 4.5 secs and 1M logical reads

- Tuning this query manually by an average developer or DBA would take hours., an expert could maybe do it in less than an hour, and a junior analyst might not be able to tune it at all. On the other hand the query can be tuned in minutes with VST diagrams which include join filters, 2 table result set sizes and analysis of transitivity.

- The VST relies filter ratios and 2 table join result set sizes works well. For queries where even the 2 table results take too long, such as a multi-day data warehouse query there are other ways to estimate the join result set and filter ratios. We will explore some of these methods in future posts.

Query 4

- With this diagram we have a good idea of the join path. Start at A which has the most selective filter then join to C by the shortest path with is B. This join path (A,B,C) lays the foundation for a good join path.

- The actual best path is A,F,B,G, C, D, E but to know that we we need more information which is the TTJ sizes.

- It's helpful to know the query returns about 2000 rows (which are summed and grouped by to output one row) so we should be able to prune the "running row count" down and keep it down.

- Start at A (highest filter ratio)

- Join to F (only 1 row, doesn't change running row set size )

- Join to B (got to go to B to get to C )

- Join to G (minor filtering)

- Join to C (next best filter ratio)

- Join in D and E at the end, looks like they are translation joins

- For some reason ( I think it's a bug ), Oracle joins A to F before applying the filter on A and makes a view that it then uses to join to B. The problem is this view on A to B is expensive before the filter on A is applied because it means a join of 2280 to 40,000 rows, whereas after applying the filter to A, we have only 1 row to join into F returning 1 row.

- Default plan

- Optimized plan

Subquery Diagramming

Simple join

select * from a, b where b.f=a.f

Non-correlated subquery is basically the same

select * from a,

(select b.f1 from b where b.f2=10)

c where c.f1=a.f1

what about aggregate non-correlated sub-queries. They aren't exactly the same since they are more difficult rewrite as a regular join:

select ename from emp, (select deptno from emp group by deptno having count(*) > 1) a where a.deptno=emp.deptno;

Single row subquery

Special case, where non correlated subquery only returns one row

select * from a where a.f1 =

(select max(b.f1) from b )

(laid out side by side because it acts as a filter not a master detail relationship)

select * from a where a.sal >

(select avg(sal) from b where b.f2=a.f2)

Alternatively an arrow could be included to indicate that the subquery could be run for every row returned in the out query

Is there a need to distinguish between aggregated correlated sub-queries and just correlates sub-queries.

Example

SELECT

A.COMPANY

, A.PAYGROUP

, E.OFF_CYCLE

, E.SEPCHK_FLAG

, E.TAX_METHOD

, E.TAX_PERIODS

, C.RETROPAY_ERNCD

, sum(C.AMOUNT_DIFF) SUM_AMOUNT

from PS_PAY_CALENDAR A

, WB_JOB B

, WB_RETROPAY_EARNS C

, PS_RETROPAY_RQST D

, PS_RETROPAYPGM_TBL E

where A.RUN_ID = 'PD2'

and A.PAY_CONFIRM_RUN = 'N'

and B.COMPANY = A.COMPANY

and B.PAYGROUP = A.PAYGROUP

and E.OFF_CYCLE = A.PAY_OFF_CYCLE_CAL

and B.EFFDT = (SELECT

/*+ qb_name(wb_hj) */

MAX(F.EFFDT)

from WB_JOB F

where F.EMPLID = B.EMPLID

and F.EMPL_RCD# = B.EMPL_RCD#

and F.EFFDT< = A.PAY_END_DT)

and B.EFFSEQ = (SELECT MAX(G.EFFSEQ)

from WB_JOB G

where G.EMPLID = B.EMPLID

and G.EMPL_RCD# = B.EMPL_RCD#

and G.EFFDT = B.EFFDT)

and C.EMPLID = B.EMPLID

and C.EMPL_RCD# = B.EMPL_RCD#

and C.RETROPAY_PRCS_FLAG = 'C'

and C.RETROPAY_LOAD_SW = 'Y'

and D.RETROPAY_SEQ_NO = C.RETROPAY_SEQ_NO

and E.RETROPAY_PGM_ID = D.RETROPAY_PGM_ID

group by A.COMPANY

, A.PAYGROUP

, E.OFF_CYCLE

, E.SEPCHK_FLAG

, E.TAX_METHOD

, E.TAX_PERIODS

, C.RETROPAY_ERNCD

/

I originally diagram this as

but the subqeries are really only on G and F and the correlate with tables in the outside query:

Outer Joins

If English and French both have a unique key on the "ordinal_id" then it's basically one-to-one relationship

We add an arrow in the middle of the line to denote "outer join". The arrow points from the table that drives the join, ie all the rows in the table pointed from are returned even if a match isn't found in the table pointed to.

above graphic originally on http://blog.mclaughlinsoftware.com/oracle-sql-programming/basic-sql-join-semantics/

type ANSI ANSI 89 (Oracle) type type

inner join english INNER JOIN french

using (ordinal_id) english e, french f

where e.ordinal_id=f.ordinal_id

left outer join english LEFT JOIN french

using (ordinal_id) english e, french f

where e.ordinal_id=f.ordinal_id(+)

right outer join english RIGHT JOIN french

using (ordinal_id) english e, french f

where e.ordinal_id(+)=f.ordinal_id

full join english FULL JOIN french

using (ordinal_id)

english e, french f

where e.ordinal_id=f.ordinal_id(+)

UNION

english e, french f

where e.ordinal_id(+)=f.ordinal_id

In/Exists

Semi Joins

SELECT *

FROM dept d WHERE exists ( SELECT null FROM emp e WHERE e.deptno=d.deptno);

SELECT *

FROM dept d WHERE d.deptno in ( SELECT deptno FROM emp e );

Not/Not Exists

SELECT *

FROM dept d WHERE not exists ( SELECT null FROM emp e WHERE e.deptno=d.deptno);

SELECT *

FROM dept d WHERE d.deptno not in ( SELECT deptno FROM emp e );